
When a data analytics platform fits users’ needs, it makes analytics workflows smoother and business goals easier to reach.

We say this as a team that has built platforms to do just that. For example, our client Sparrow Chart pulled in 1500+ new users in a niche market in just a few months after we redesigned their platform, adding new features and implementing custom interactive charts and dashboards as part of our data visualization services.

Another client, a telecom broker, used to slog through days of manual data matching each month. Now the same process takes minutes thanks to a solution we designed to handle the heavy lifting.

Every project we take on is as unique as the business it serves, and after building 10+ data analytics platforms, we’ve developed a roadmap that works. If you’re wondering how to build a data analytics platform — the process, the costs, or if it’s possible to create what you need — you’re in the right place. We’re about to tell you everything.

Here is a rundown of how the process usually goes:

Everything seems pretty straightforward, right? But a smooth and successful development process depends on the details: what’s done at each stage, which features are chosen to target each of your needs, and how the development team executes them. Let’s get into each stage to explore these details.

The project discovery stage is all about understanding what you’re after and considering each part of your platform so it aligns with what you want to achieve. It’s like framing the house before the walls go up — with a solid frame, everything else falls into place.

Here’s what happens during this stage:

Everything starts with a few meetings with you to find out what you’re aiming to achieve, what you’re struggling with, and what you want to see in your platform.

Along with figuring out your vision for the platform, we dive into specifics. What data do you want to collect and analyze? What data sources do you need to connect? What are your data visualization and reporting needs?

This step is where we shape the general picture so that every next step we take is aligned with your wants and needs.

Next, it’s time to focus on details by:

The goal is to cover every requirement so nothing is missed once we’re at the development stage.

Once we figure out these details, you’ll get an app requirements document or software requirements specification — a full description of your product with all requirements in one place. It’s proof that we’re on the same page with you and serves as a guideline for developers on what to build.

With requirements in hand, we’re ready to pick the tools, frameworks, and architecture that will make your platform run smoothly. Every platform’s a bit different, so we look closely at details like the kind of data you work with, your security needs, and any special requirements for importing or exporting data.

Here are a few examples:

Do you need to automatically pull in data from outside sources? We’ll explore documentation on relevant APIs and build a proof of concept first to see if there are any limits or corner cases to watch out for.

Have existing data to migrate to the new platform? The type and amount of data will impact how we design the database and set up the architecture. We’ll make sure all your data fits seamlessly into the new platform.

Your platform’s safety is non-negotiable. We always consider measures like role-based access, two- or multi-factor authentication, and data encryption. And if your platform handles sensitive data, such as healthcare data, we can go further and comply with HIPAA or other requirements.
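To make role-based access a bit more concrete, here is a minimal sketch of how roles can be mapped to allowed actions. The role names, action names, and the `can` helper are assumptions made up for this illustration; a real platform would define them during discovery.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    ANALYST = "analyst"
    ADMIN = "admin"


# Hypothetical permission map: each role lists the actions it may perform.
PERMISSIONS = {
    Role.VIEWER: frozenset({"view_dashboard"}),
    Role.ANALYST: frozenset({"view_dashboard", "export_report"}),
    Role.ADMIN: frozenset({"view_dashboard", "export_report", "manage_users"}),
}


def can(role: Role, action: str) -> bool:
    """Return True if the given role is allowed to perform the action."""
    return action in PERMISSIONS.get(role, frozenset())
```

With a map like this, every request handler can call `can(user_role, "export_report")` before doing any work, so access rules live in one place instead of being scattered across the code.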

This is just a small portion of what we plan — you’ll get a full architecture design at the end of this step. Basically, we make sure every technical choice is in line with your needs so your platform is reliable, secure, and ready to perform as you want it to.

This is where we map out the user experience and design structure.

Do you want your platform to feel intuitive and look polished? So do we, which is why we consider each element, from buttons and fields to data visualizations and dashboard layouts.

We’ll show you a UI/UX prototype once it’s ready so you can make sure the platform looks how you want it to and fits naturally into users’ workflows.

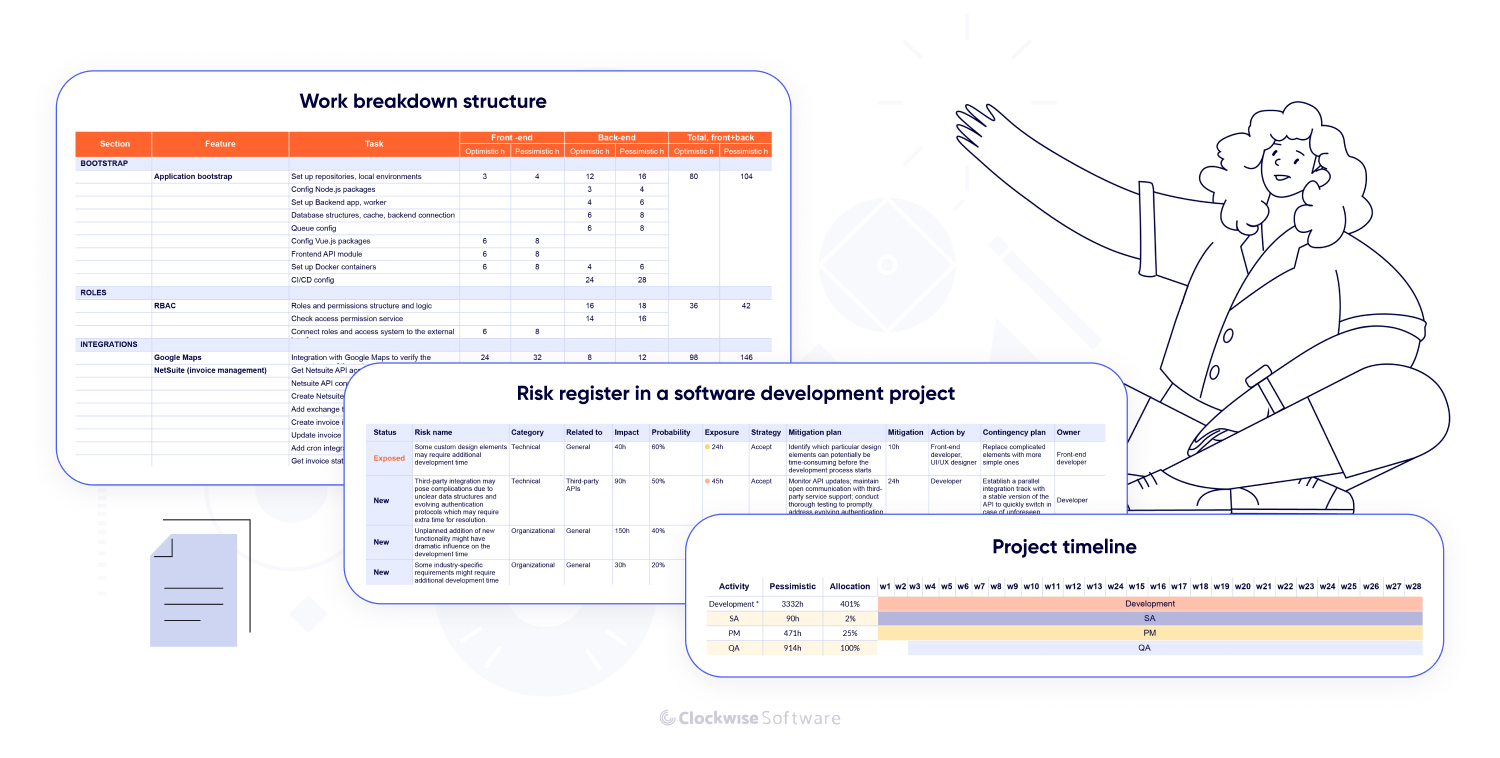

Finally, we build a clear plan that gives you both timeline and budget estimates. Here’s how you’ll see it come together:

With the app requirements document and UI/UX prototype we mentioned before, you’ll have a full picture of what your platform will look like, how it will work, and how you will bring it to life.

The discovery stage usually takes at least 3 weeks and starts at about $12,000. How long it takes and what it costs depend on a few things, like the size of your project, any prep work you’ve already done, and the specific deliverables you’re looking for.

For bigger or more complex projects, this stage might stretch to 8 weeks and cost upwards of $25,000. It all comes down to what your project needs to get off to the best possible start. Here is an example of 3 discovery stage packages we offer our clients:

| | Small package | Medium package | Large package |
| --- | --- | --- | --- |
| Delivery time | 3 weeks | 5 weeks | 8+ weeks |
| Cost | $12,000+ | $16,000+ | $25,000+ |

Once we know exactly what the platform needs to accomplish, it’s time to start development. Here’s what the development team tackles when building an analytics platform:

First comes the initial preparations behind the scenes: setting up version control, creating code repositories, configuring development environments, and putting continuous integration pipelines in place. Think of it like setting up your workspace — all the tools you use for creating a functional product need to be in place to ensure a smooth process.

Next, we dive into building functionality:

We break this work into 2- to 4-week iterations. Each iteration focuses on building a few features from start to finish. And after every iteration, we test what’s been built to make sure it does what it’s supposed to.

Once we’ve made solid progress (let’s say about 50% of the features are done), it’s time to migrate data. If you have existing data, we make sure it’s brought into the new platform cleanly and accurately.
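One way to check that data arrived cleanly and accurately is to compare the old and new data sets record by record. The sketch below assumes records can be loaded as lists of dictionaries that share an `id` key; the function names and record shape are illustrative assumptions, not a description of a specific migration tool.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def compare_datasets(source: list, target: list, key: str = "id") -> dict:
    """Report records that are missing, unexpected, or changed after migration."""
    src = {row[key]: fingerprint(row) for row in source}
    dst = {row[key]: fingerprint(row) for row in target}
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "unexpected": sorted(dst.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

An empty result in all three lists suggests the migrated data matches the source; anything else points to the exact records that need a second look.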

We also work on integrations so you can pull in data from external sources or use third-party tools right in your platform.

After all the features are built, we start regression testing. This is where we make sure that your platform operates as expected after we combine all pieces of functionality.

Then we move to stabilization; this step is like polishing the platform. We fix any bugs, fine-tune performance, and optimize how the platform handles your data so everything runs smoothly.

User acceptance testing is where you and your team step in. You’ll get your hands on the platform, test it out, and tell us if anything doesn’t feel quite right. We take your feedback seriously and make fixes as needed to ensure your platform meets your expectations.

Finally, it’s time to set up the platform for actual use. After deployment, we usually do one last round of checks just to be sure everything’s perfect. Then, your data analytics platform will be live and ready for use.

The best part of custom software development is that we can tailor everything to fit exactly how you operate.

Want a built-in report constructor? No problem.

Need it to integrate with your CRM or ERP? We can do that.

Whether you need fresh analytics to pop up every Monday or data visualizations that make your priority metrics easy to spot, it’s all doable. Just let us know what you want to see on your platform.

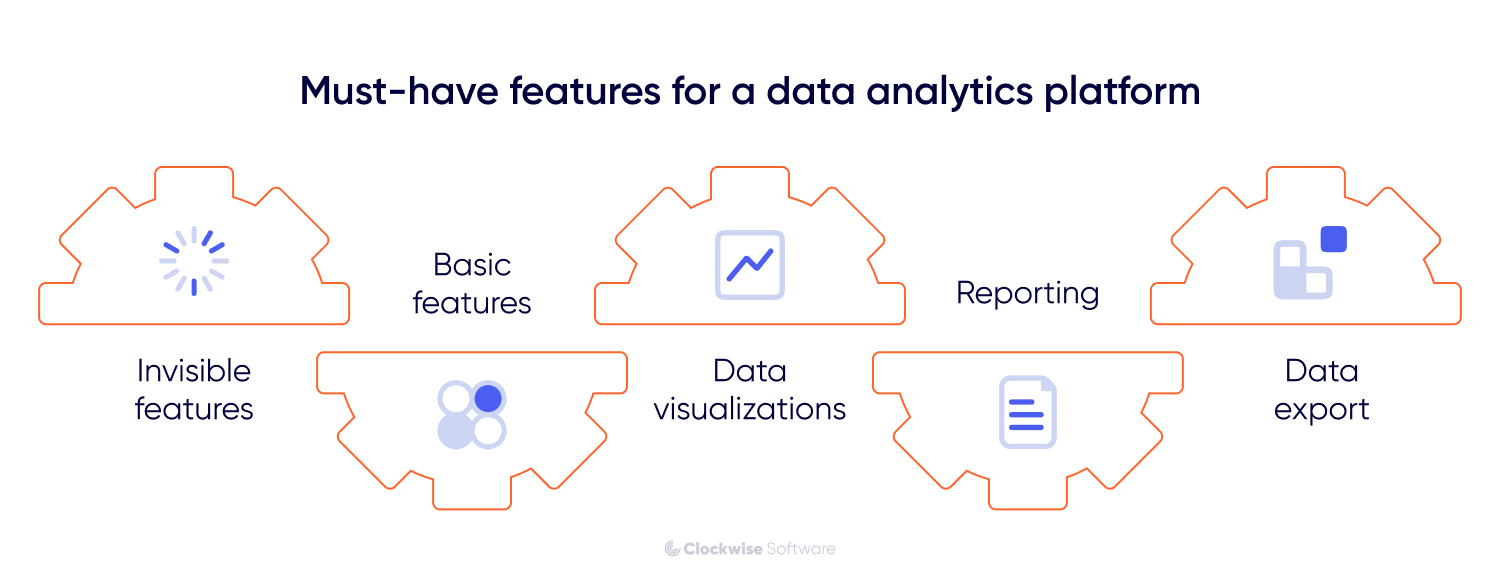

But let’s talk about the essentials for every data analytics platform — these are the building blocks we’ll shape and configure to match exactly what you need. We break them into 4 groups.

Let’s start with features that work quietly behind the scenes and make everything on your platform possible. Without these, your platform is just a pretty interface with no actual utility:

The tools and services used to build an analytics platform vary, and there are plenty of options for each case and need. You can read more about the options, along with other nuances of building analytics software, in our white paper.

Meanwhile, let’s move to the features you can actually see and use. We’ll start with the most basic.

These features don’t perform the core functions of your platform but are essential for smooth day-to-day use. They’re the kind of features you might not notice until they’re missing:

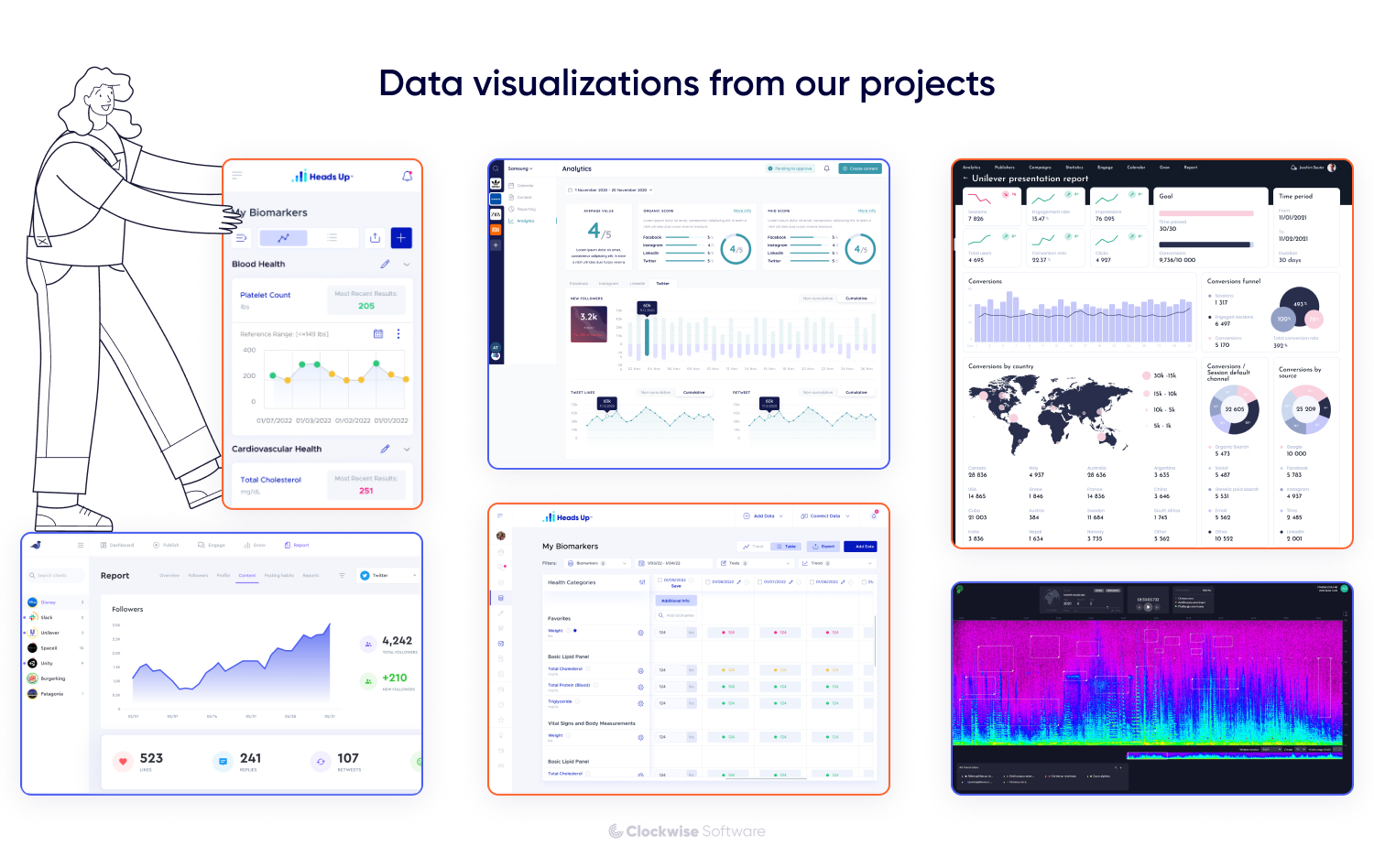

Data visualizations really make your data analytics platform come to life. This is where all that raw data is turned into something you can actually understand and act on: dashboards, tables, diagrams, heatmaps, spectrograms — you name it.

And these are not one-size-fits-all visualizations. Customization is key. Over the years, we’ve built a wide range of visuals tailored to our clients’ needs.

Take Sparrow Chart, for example. We not only created customized spline and line charts for our client to monitor social media data over time but also took care to add smooth animations for chart updates. This is much better than if the chart needed to re-render each time new data came in, right?

Rainforest Connection, a non-profit organization sponsored by Google, had a special request: visualizing audio by creating spectrograms. We implemented interactive spectrograms, allowing users to zoom in on specific frequencies.

One more example of data visualizations is Heads Up Health — a health tracking solution where a big part of our work involved creating diverse tables and graphs:

Whatever your business needs, there’s a way to turn your raw data into something actionable, insightful, and easy to use.

When building these features, we use tools like D3.js and Chart.js. For more robust platforms, we might integrate Tableau or Power BI directly into the system. These tools let us create stunning, highly interactive charts that update in real time as your data flows in.

Lastly, your platform needs reporting and data export features to make it easy to share insights with others. Again, you can choose from diverse features to get a convenient and effective tool. Just focus on your needs.

Need to minimize time spent on creating reports? A report constructor or ready-made templates can help. We created this feature as part of an update for Sparrow Chart, and it resulted in the product finding market traction and gaining 1500+ new users in the first months.

Does your team need multiple export formats? We can make your platform support CSV, XLS, PDF, and other formats — just tell us what you need. For example, when building a platform for a telecom provider, we enabled users to export data from the dashboard in CSV and XLS formats, making it easy to view, edit, and share data within other apps.
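For a sense of what a CSV export involves, here is a minimal sketch using only Python’s standard library. It assumes dashboard rows are available as dictionaries; the `export_csv` name and the column names in the usage note are hypothetical.

```python
import csv
import io


def export_csv(rows: list, columns: list) -> str:
    """Serialize dashboard rows (dicts) to CSV text with a header row."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops fields that are not in the chosen columns.
    writer = csv.DictWriter(buffer, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Calling `export_csv(rows, ["campaign", "clicks"])` returns text that can be sent to the browser as a file download. Formats like XLS or PDF are not covered by the standard library and would need a third-party library.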

To give you a ballpark figure, building a data analytics platform typically costs between $100,000 and $200,000 and takes 6 months or longer. If you’re adding advanced features like AI-powered search or complex analytics algorithms, the price tag could be higher.

Let’s say you’re creating a data analytics platform for social media management (SMM). Here’s a rough breakdown of the functionality you might include, along with time and cost estimates:

We recommend developing a minimum viable product (MVP) first. You don’t need to build a data analytics platform with every possible feature at once; just focus on the core features your audience really needs. An MVP lets you launch faster, test your idea with end users, and reduce software development costs. Once you have the basics working, you can add more features over time (we’ll tell you about this in the next stage).

Once your platform is built and launched, the job isn’t over — it’s just the beginning of the post-release stage.

We are here for all of that. We’ll discuss what you want to implement next, agree on the scope of work, and get busy.

Here’s an example: We worked on a lead analytics system that started slowing down as more users and data piled in. The system owner wanted to add new features but worried that would make the platform even slower.

Our solution was to redesign the platform with a new front-end tech stack. We replaced the old AngularJS code with Vue.js and even gave the system a fresh, user-friendly design. We released the changes gradually so that existing users didn’t notice a thing, except for an 8x speed boost.

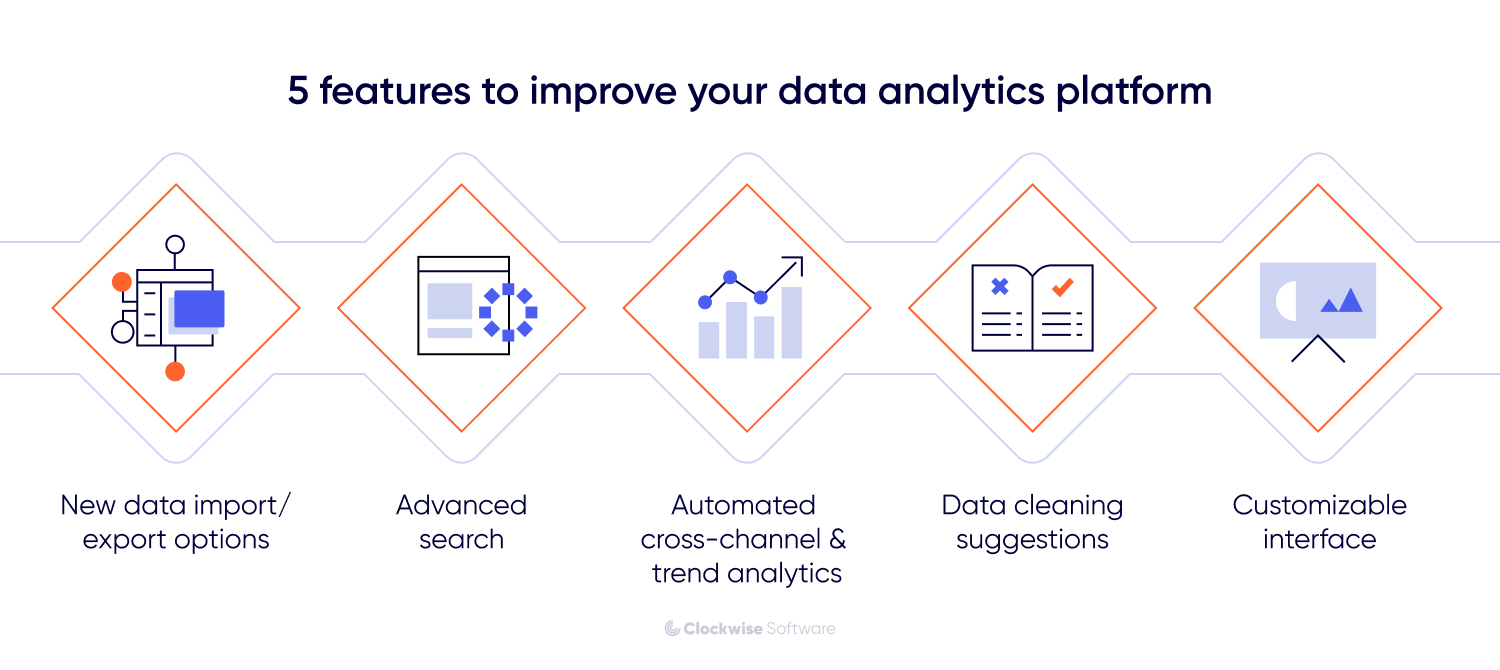

How you improve your platform depends entirely on your vision and goals; it can be a small tweak or a major upgrade. To spark some ideas, let’s walk through a few examples of valuable improvements you can make once your initial version has been up and running for a while.

Here are a few upgrades that can have a big impact:

New data import/export options

If you focused on integrating with core external tools when building an MVP, you can now expand the ways you bring in and share data. Configuring the system for new data formats, integrating with additional sources, or creating new export options can make the platform more comprehensive and convenient.

Better search tools can save time and make it easier to find what you need. For example, we could add predictive search or filters that let you dig deeper into specific data sets, like pulling up trends by campaign or region.

Enhance your platform with new analytics options — for example, to easily spot trends among historical data or simplify cross-channel analysis for multiple clients.

We worked with Attention Experts, a top Australian marketing agency, to automate their cross-channel analysis. Attention Experts used complex custom formulas in Excel and manually updated every metric monthly — a time-consuming and error-prone process. We created algorithms that reflected their formulas, enabling their analytics platform to handle everything automatically, from pulling in fresh data every month to analyzing and visualizing it.
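To show the general idea of moving a spreadsheet formula into code, here is a small sketch. The formula used (engagement rate = interactions / impressions) and the field names are assumptions chosen for illustration; they are not the client’s actual formulas.

```python
from collections import defaultdict


def engagement_rate_by_channel(rows: list) -> dict:
    """Aggregate interactions and impressions per channel, then compute the rate.

    Hypothetical formula: engagement rate = interactions / impressions.
    """
    totals = defaultdict(lambda: {"interactions": 0, "impressions": 0})
    for row in rows:
        totals[row["channel"]]["interactions"] += row["interactions"]
        totals[row["channel"]]["impressions"] += row["impressions"]
    return {
        # Channels with no impressions get a rate of 0.0 instead of an error.
        channel: (t["interactions"] / t["impressions"] if t["impressions"] else 0.0)
        for channel, t in totals.items()
    }
```

Once a formula lives in a function like this, it can run on a schedule against fresh data instead of being retyped into a spreadsheet every month.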

A feature that flags inconsistencies or missing data will boost accuracy and reduce time spent on manual checking. It’s a small upgrade, but it makes a big difference in keeping your data reliable.
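A simple version of such a check could look like the sketch below. It assumes rows are dictionaries, and the consistency rule (clicks can never exceed impressions) is a hypothetical example; real rules would come from your own data.

```python
def find_data_issues(rows: list, required: list) -> list:
    """Return (row_index, field, problem) tuples for missing or inconsistent values."""
    issues = []
    for index, row in enumerate(rows):
        # Flag required fields that are absent, None, or empty strings.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append((index, field, "missing"))
        # Hypothetical consistency rule: clicks can never exceed impressions.
        clicks, impressions = row.get("clicks"), row.get("impressions")
        if isinstance(clicks, (int, float)) and isinstance(impressions, (int, float)):
            if clicks > impressions:
                issues.append((index, "clicks", "exceeds impressions"))
    return issues
```

The returned list can feed a warning banner or a review queue, so problems are caught before they reach a report.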

You finally have user feedback, so it’s a good time to improve the user experience. Interface customization is a great way to do this: give users an option to hide features they don’t need, rearrange dashboards, or customize their views based on their specific roles.

These upgrades focus on saving time, improving data accuracy, and tailoring the platform to how you actually use it. But there are many other ways to improve your platform — just focus on what will help you achieve your goals.

Costs for this stage can vary a lot; it all comes down to how much you want to tackle at once and how quickly you want it done.

For example, if the scope of work is small or you’re not in a rush, a small team can handle it at a steady pace, keeping costs on the lower side. If you’re aiming for a big upgrade or need things done quickly, we’d assign a larger team to work faster. Naturally, this increases the monthly budget, but it gets the job done sooner.

Here’s an example of how a monthly budget might look based on the team allocation.

| Team composition | Monthly budget |
| --- | --- |
| | $12,000 |
| | $48,000 |

\* On-demand involvement

When it comes to hiring a team to build an analytics platform, you have multiple options. Of course, if you have an in-house development team, you can kick off the project with them. But if you don’t, consider choosing a software development outsourcing company to help you build your tool.

We offer two cooperation options: product development and dedicated team.

With this option, you hand over the entire development process to us. We take care of the full software product development lifecycle, from gathering your initial requirements to project discovery, design, development, testing, and post-launch support.

This is a great option if you’d rather focus on your business while we handle all the tech. You get regular updates and sign off on major decisions, but we take care of the day-to-day development work.

You can trust that we’re building your data platform right, and you won’t have to worry about managing developers or technical aspects of the project.

If you already have a team but need to fill in some gaps — whether that’s adding more developers, increasing your speed, or accessing specific expertise — this option is the way to go. You get to keep control of your project while we provide our specialists to join your team.

Hiring dedicated software developers can be nerve-wracking, but we make sure we fit right in with your workflow, keep communication clear, and stay on track. If you have some concerns about alignment and quality, we’ve got you covered with a track record of successful collaborations.

Take our partnership with Heads Up Health. Our developers and QA engineers have been helping their in-house team build and improve the product since 2019, and our cooperation continues to grow.

Building a data analytics platform is a big step, and it can feel like there’s a lot to figure out. But with the right approach, it is a manageable and rewarding journey.

Not sure which technical solution will best fit your needs? Starting with software development consulting or project discovery is a smart move. This helps you lay the foundation for development right from the start, ensuring that your platform is built on solid technical ground.

Already have a clear vision and plan in mind? Great! You can gather the team, move into development, and start bringing your idea to life.

We are ready to help with whatever stage you’re at. If our expertise feels like the right fit for your project, let’s start with a free call.